Tutorial on Deep Learning and NLP

Recent Trends in Machine Learning for NLP: Deep Learning and Spectral Methods

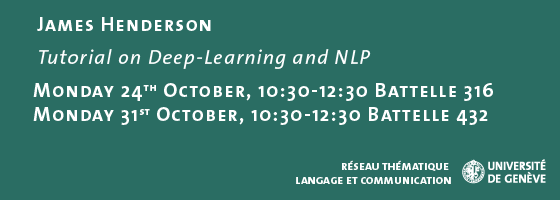

James Henderson

Monday 24th October, 10:30-12:30, Battelle 316

Monday 31st October, 10:30-12:30, Battelle 432

From Facebook's face recognition to AlphaGo's victory over one of the world's strongest human Go players, Deep Learning is having a transformational impact across all areas of Artificial Intelligence, including Natural Language Processing. This tutorial introduces the models behind these advances and surveys some of their applications to NLP tasks. It assumes a basic understanding of natural language processing tasks and methods (tagging, parsing, grammatical derivations, word sparsity, supervised learning, inductive bias, etc.). Some of the methods' mathematical foundations will be covered, but the focus is on their high-level characteristics and practical usefulness. The tutorial was developed as training for the NLP group at the European Patent Office, with the help of my colleagues at Xerox Research Centre Europe.

The tutorial consists of two sessions. The first session introduces neural networks, deep learning, and spectral methods, including LSTMs (Long Short-Term Memory networks), Convolutional Neural Networks, Incremental Neural Networks, and spectral HMMs. All of these methods induce latent vector spaces, which allow varying degrees of indirectness between the input and output representations of a task. They include models of sequences, structures, automata, sparse inputs (e.g. word embeddings), and sequence-to-sequence tasks (such as machine translation). The second session covers applications to a variety of NLP tasks: syntactic and semantic parsing, relation extraction, spoken language understanding, lexical semantic entailment, and dialogue state tracking. If time permits, we will also discuss sentiment analysis, named entity recognition, and natural language generation.